Creating Docker containers

With a Dockerfile, a text file of build instructions, we can define our own container image that includes our own application. Docker builds the Dockerfile into an image, which can then be started locally as a container or pushed to Docker Hub.

Creating your own Docker image

We will use this example as our basis. Our `randomizer.py` application file sits in a subdirectory called `app`. The Dockerfile itself should be named `Dockerfile`, with no extension, which is the name `docker build` looks for by default. It has the following contents:

```dockerfile
FROM python:3.10.0-alpine
WORKDIR /code
EXPOSE 8000
COPY ./requirements.txt /code/requirements.txt
RUN pip install --no-cache-dir --upgrade -r /code/requirements.txt
COPY ./app /code/app
CMD ["uvicorn", "app.randomizer:app", "--host", "0.0.0.0", "--port", "8000"]
```

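- `FROM python:3.10.0-alpine` sets the base image. To start, we base the image on one where Python is preinstalled. The default Python image is built on a full Linux distribution, which makes it quite big at 300 MB or more. We opt for the Alpine Linux distribution instead, which is well suited as a base for small application containers. The downside of Alpine-based images is that many standard features have been cut, including basic tooling like `gcc`, which is often needed to build pip packages with C extensions. An alternative is `slim-buster`, a slimmed-down Debian image. It does not ship `gcc` either, but because it uses the standard glibc C library, most pip packages install from prebuilt wheels without compiling, and build tools can be added with `apt-get` when needed.
- `WORKDIR /code` creates a `code` directory inside the container filesystem if it does not exist yet. It then makes that directory the working directory, like a `cd`, for all following instructions and for the running container.
- `EXPOSE 8000` documents that the application listens on port 8000. It does not publish the port by itself. To reach the API from the host, the port still has to be mapped when the container is started, for example with `docker run -p 8000:8000`.
- `COPY ./requirements.txt /code/requirements.txt` copies `requirements.txt` from the build directory to the `code` directory inside the container. Copying it separately, before the application code, lets Docker reuse the cached dependency layer when only the code changes.
- `RUN pip install --no-cache-dir --upgrade -r /code/requirements.txt` installs the required libraries inside the container, based on the `requirements.txt` file we just copied in. The `--no-cache-dir` flag keeps pip's download cache out of the image, which keeps the image smaller.
- `COPY ./app /code/app` copies the contents of the `app` subdirectory, which holds our `randomizer.py` application file, into a new `app` subdirectory of `/code` inside the container.
- `CMD ["uvicorn", "app.randomizer:app", "--host", "0.0.0.0", "--port", "8000"]` specifies which process starts by default when the container starts. This is the exec form: the parts of the command that are normally separated by spaces become separate values in a JSON array. Note `app.randomizer`, which reflects that `randomizer.py` is located in the `app` subdirectory of `/code`, the working directory set by `WORKDIR`. The `--host 0.0.0.0` option makes uvicorn listen on all network interfaces of the container rather than only on localhost, which is required for connections from outside the container.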
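The conversion from a space-separated command line to the separate values used by `CMD` can be illustrated in a few lines of Python. This sketch splits the command with the standard-library `shlex` module and prints it as a JSON array. It is only an illustration: Docker does not run this code, and the function name `to_exec_form` is hypothetical.

```python
import json
import shlex


def to_exec_form(command_line: str) -> str:
    """Turn a shell-style command line into a Dockerfile exec-form JSON array.

    shlex.split() breaks the string on whitespace while honouring quotes,
    much like a shell separates the arguments of a command.
    """
    return json.dumps(shlex.split(command_line))


if __name__ == "__main__":
    line = "uvicorn app.randomizer:app --host 0.0.0.0 --port 8000"
    print("CMD " + to_exec_form(line))
```

Running it prints the same `CMD` line as in the Dockerfile. The exec form matters because Docker starts `uvicorn` directly instead of wrapping it in `/bin/sh -c`. As a result, stop signals reach the application process itself.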

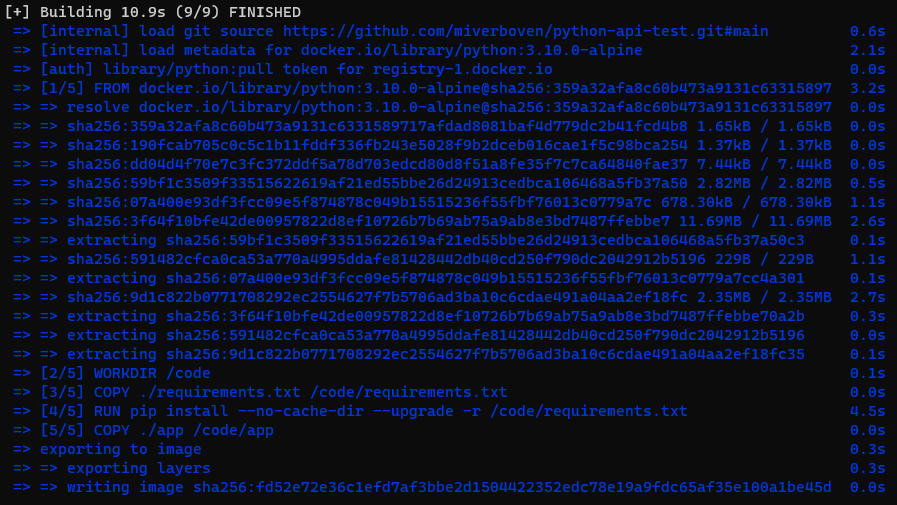
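The `app.randomizer:app` argument follows the `module:attribute` convention. The part before the colon is a dotted module path, resolved relative to the working directory `/code`. The part after the colon names the object inside that module. The sketch below imitates that lookup with `importlib` against a throwaway directory laid out like the image. It is a simplified stand-in for what uvicorn does, and the placeholder `app` string replaces the real FastAPI application, which is not shown here. The helper name `load_from_string` is hypothetical.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def load_from_string(import_str: str, workdir: str):
    """Resolve a 'package.module:attribute' string, roughly like an ASGI server.

    The working directory is put on sys.path, so 'app.randomizer' maps to
    <workdir>/app/randomizer.py, just like /code/app/randomizer.py in the image.
    """
    module_path, _, attribute = import_str.partition(":")
    sys.path.insert(0, workdir)
    try:
        importlib.invalidate_caches()  # pick up files created at runtime
        module = importlib.import_module(module_path)
    finally:
        sys.path.remove(workdir)
    return getattr(module, attribute)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as code_dir:
        # Recreate the /code/app/randomizer.py layout in a temporary folder.
        app_dir = Path(code_dir) / "app"
        app_dir.mkdir()
        (app_dir / "__init__.py").write_text("")
        # Placeholder object standing in for the real FastAPI app.
        (app_dir / "randomizer.py").write_text('app = "randomizer placeholder"\n')
        print(load_from_string("app.randomizer:app", code_dir))
```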

At this point we can build the image with `docker build`. From a terminal on our own system, in the directory that holds the Dockerfile we just made, we run:

```shell
docker build -t <INSERT YOUR DOCKER HUB ACCOUNT NAME>/python-api-test:latest .
```

Here `-t` is followed by the tag the Docker image should receive, and the final `.` sets the build context to the current directory. It is best practice to combine your own Docker Hub account name and the name of the image, followed by a version tag.
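The naming convention can be captured in a small helper. The sketch below assembles `<account>/<image>:<version>` and the matching `docker build` argument list. The function names `image_tag` and `build_command` and the account name `myaccount` are hypothetical. The validation rules are a simplified subset of Docker's image reference grammar, not the complete specification.

```python
import re

# Simplified versions of Docker's reference rules (assumption: good enough for
# typical names; the full grammar also allows registry hosts, "__" and "--").
COMPONENT = re.compile(r"[a-z0-9]+(?:[._-][a-z0-9]+)*")
TAG = re.compile(r"[\w][\w.-]{0,127}")


def image_tag(account: str, image: str, version: str = "latest") -> str:
    """Return '<account>/<image>:<version>' after basic validation."""
    for part in (account, image):
        if not COMPONENT.fullmatch(part):
            raise ValueError(f"invalid repository component: {part!r}")
    if not TAG.fullmatch(version):
        raise ValueError(f"invalid tag: {version!r}")
    return f"{account}/{image}:{version}"


def build_command(account: str, image: str, version: str = "latest",
                  context: str = ".") -> list[str]:
    """Argument list for 'docker build -t <tag> <context>'."""
    return ["docker", "build", "-t", image_tag(account, image, version), context]


if __name__ == "__main__":
    print(" ".join(build_command("myaccount", "python-api-test")))
```

Keeping the command as a list makes it easy to pass to `subprocess.run` without shell quoting issues. If the `:<version>` part is left out entirely, Docker falls back to `latest`.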

To build straight from a Git repository that holds our code and Dockerfile in its root, on the `main` branch, we pass the repository URL as the build context:

```shell
docker build -t <INSERT YOUR DOCKER HUB ACCOUNT NAME>/python-api-test:latest https://github.com/miverboven/python-api-test.git#main
```
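A Git build context has the form `<repository URL>#<ref>:<subdirectory>`, where both the ref and the subdirectory are optional. Here `#main` selects the branch, and the Dockerfile is taken from the repository root. The hypothetical helper below splits such a URL into its parts to make the structure explicit. Docker does this parsing itself, so the sketch is for illustration only.

```python
from typing import NamedTuple, Optional


class GitContext(NamedTuple):
    repository: str
    ref: Optional[str]           # branch, tag or commit; None means the default branch
    subdirectory: Optional[str]  # None means the repository root


def parse_git_context(url: str) -> GitContext:
    """Split '<repo>#<ref>:<subdir>' into its parts (illustrative only)."""
    repository, _, fragment = url.partition("#")
    ref, _, subdirectory = fragment.partition(":")
    return GitContext(repository, ref or None, subdirectory or None)


if __name__ == "__main__":
    print(parse_git_context("https://github.com/miverboven/python-api-test.git#main"))
```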

Creating a GitHub Actions pipeline to build and push the image

When our code is in a GitHub repository, we can use GitHub Actions to automate the Docker image build so that it runs, for example, whenever we push new code to the repository. Workflow files live in the repository's `.github/workflows` directory. Our example includes the following workflow:

```yaml
name: Deliver container

on: push

jobs:
  delivery:
    runs-on: ubuntu-latest
    name: Deliver container
    steps:
      - name: Check out repository
        uses: actions/checkout@v4

      - name: Docker Login
        # Pass the password via stdin instead of the -p flag
        env:
          DOCKER_PASSWORD: ${{ secrets.DOCKER_PASSWORD }}
        run: echo "$DOCKER_PASSWORD" | docker login -u "${{ secrets.DOCKER_USER }}" --password-stdin

      - name: Docker Build
        run: docker build -t ${{ secrets.DOCKER_USER }}/python-api-test:latest .

      - name: Upload container to Docker Hub with Push
        run: docker push ${{ secrets.DOCKER_USER }}/python-api-test:latest
```

Whenever code is pushed, this pipeline runs on a temporary Ubuntu virtual machine provided by GitHub and completes the following steps:

- Checking out the repository and copying its code onto the VM, using the standard `actions/checkout` action.
- Running the CLI command `docker login` to log in to our Docker Hub account, which we need later to push the image to Docker Hub.
- Running `docker build` in the same way as we do locally.
- Running `docker push` to push our image to our Docker Hub account.

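The `${{ secrets.DOCKER_USER }}` and `${{ secrets.DOCKER_PASSWORD }}` expressions are filled in from the repository secrets on GitHub and hold your Docker Hub username and password. Add them manually under the repository's Settings > Secrets and variables > Actions. A Docker Hub access token can be stored in `DOCKER_PASSWORD` instead of your actual password.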
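Conceptually, GitHub replaces each `${{ secrets.NAME }}` expression with the stored value before the `run` command reaches the shell. The sketch below imitates only that substitution step with a regular expression. GitHub's real expression engine supports much more, such as other contexts, functions and operators, and it also masks secret values in the logs. The hypothetical `expand_secrets` function does none of that, and the username `alice` is a placeholder.

```python
import re

# Matches expressions such as ${{ secrets.DOCKER_USER }} (whitespace optional).
SECRET_EXPR = re.compile(r"\$\{\{\s*secrets\.([A-Za-z_][A-Za-z0-9_]*)\s*\}\}")


def expand_secrets(command: str, secrets: dict[str, str]) -> str:
    """Replace every secrets.NAME expression with its value.

    Unknown names expand to an empty string, as an unset secret does on GitHub.
    """
    return SECRET_EXPR.sub(lambda m: secrets.get(m.group(1), ""), command)


if __name__ == "__main__":
    step = "docker build -t ${{ secrets.DOCKER_USER }}/python-api-test:latest ."
    print(expand_secrets(step, {"DOCKER_USER": "alice"}))
```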

Docker push & Docker login locally

If you plan to push the new container image from your local machine instead of from a GitHub Actions pipeline, remember to log in first by running `docker login` with your Docker Hub credentials. Only then will `docker push` be allowed to upload the image to your account.
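The local workflow can also be scripted. The sketch below runs `docker login`, `docker build` and `docker push` in order with `subprocess`. It reads the credentials from the environment variables `DOCKER_USER` and `DOCKER_PASSWORD`, an assumption chosen to match the secret names in the pipeline. It passes the password over standard input with `--password-stdin`, so the password does not appear on the command line. The function name `deliver` and its `run` parameter are hypothetical; `run` lets a stub replace the real commands for a dry run.

```python
import os
import subprocess
from typing import Callable

Runner = Callable[..., object]


def deliver(user: str, password: str, image: str = "python-api-test",
            version: str = "latest", context: str = ".",
            run: Runner = subprocess.run) -> str:
    """Log in to Docker Hub, build the image and push it; return the tag."""
    tag = f"{user}/{image}:{version}"
    # The password goes over stdin instead of the insecure -p flag.
    run(["docker", "login", "-u", user, "--password-stdin"],
        input=password, text=True, check=True)
    run(["docker", "build", "-t", tag, context], check=True)
    run(["docker", "push", tag], check=True)
    return tag


if __name__ == "__main__":
    # Requires Docker to be installed and both variables to be set.
    user = os.environ.get("DOCKER_USER")
    password = os.environ.get("DOCKER_PASSWORD")
    if user and password:
        print("Pushed", deliver(user, password))
    else:
        print("Set DOCKER_USER and DOCKER_PASSWORD first.")
```

Because `check=True` raises an error on a non-zero exit code, a failed login stops the script before any build or push is attempted.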